Introduction

Machine learning involves training algorithms to learn from data and make predictions or decisions without being explicitly programmed. It encompasses techniques such as supervised learning, unsupervised learning, and reinforcement learning, and is used in applications like recommendation systems, fraud detection, and image recognition.

Supervised Learning:

Supervised learning is a type of machine learning where an algorithm is trained on labeled data, meaning the training data includes both the input features and the correct output. The goal is to learn a mapping from inputs to outputs so that the model can make accurate predictions on new, unseen data.

The process of supervised learning involves several key steps:

Data Collection:

Collect a dataset that includes input-output pairs. For example, in a supervised learning task for image classification, the dataset might include images (inputs) and their corresponding labels (outputs).

Data Preprocessing:

Clean and prepare the data for training. This might involve handling missing values, normalizing numerical features, encoding categorical variables, and splitting the dataset into training and testing subsets.
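The preprocessing steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions. The helper names (`fill_missing`, `one_hot`, `fit_standardizer`, `standardize`, `train_test_split`) are hypothetical. Mean imputation and z-score scaling are only one choice among several. A real project would typically rely on a library such as scikit-learn instead.

```python
import math
import random

def fill_missing(column):
    """Replace None entries with the mean of the observed values (mean imputation)."""
    observed = [v for v in column if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in column]

def one_hot(values):
    """Encode a categorical column as 0/1 indicator vectors, one slot per category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values], categories

def fit_standardizer(column):
    """Return the mean and standard deviation of a numeric column."""
    mean = sum(column) / len(column)
    std = math.sqrt(sum((v - mean) ** 2 for v in column) / len(column))
    return mean, std or 1.0  # avoid dividing by zero for a constant column

def standardize(column, mean, std):
    """Scale values to zero mean and unit variance using precomputed statistics."""
    return [(v - mean) / std for v in column]

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split them into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]
```

In practice the split usually comes first. The mean and standard deviation are then computed on the training subset only and reused on the test subset, so no information from the test data leaks into training.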

Model Selection:

Choose a suitable machine learning algorithm for the task. Common algorithms include linear regression for continuous outputs, logistic regression for binary classification, and decision trees or neural networks for more complex tasks.

Training:

Use the labeled data to train the model. The algorithm learns by adjusting its parameters to minimize a loss function that measures the error between its predictions and the actual labels in the training data. This is done with an optimization algorithm, such as gradient descent, which iteratively updates the parameters to reduce that loss.
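As a minimal sketch of what optimization means here, assume a one-feature linear regression model $y \approx wx + b$ trained by batch gradient descent on mean squared error. The function name, learning rate, and epoch count are illustrative choices, not prescribed values.

```python
def fit_linear_regression(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b
```

On data generated by $y = 2x + 1$, the parameters converge to roughly `w = 2` and `b = 1`. A learning rate that is too large would make the updates diverge instead.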

Evaluation:

After training, the model is evaluated using a separate set of data that was not used during training (the test set). This helps assess how well the model generalizes to new, unseen data. Common evaluation metrics include accuracy, precision, recall, and F1 score, depending on the type of problem.
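The classification metrics mentioned above can be computed directly from true-positive, false-positive, and false-negative counts. Below is a minimal sketch for binary labels. `classification_metrics` is a hypothetical helper, and the positive class is assumed to be `1`.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Take true labels `[1, 0, 1, 1, 0, 0]` and predictions `[1, 0, 0, 1, 1, 0]`. They give 2 true positives, 1 false positive, and 1 false negative. Precision, recall, and F1 are therefore all 2/3, while accuracy is 4/6.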

Tuning:

Based on the evaluation results, the model may need adjustments. This can involve tuning hyperparameters (settings that control the learning process) or refining the feature set.

Deployment:

Once the model performs satisfactorily, it can be deployed to make predictions on new data. This is where the model’s ability to generalize to real-world situations is put to the test.

Unsupervised Learning:

Unsupervised learning is a type of machine learning where the algorithm is trained on data without explicit labels or outcomes. Unlike supervised learning, where the model learns from labeled data (input-output pairs), unsupervised learning seeks to identify patterns, structures, or relationships within the data without prior knowledge of the outcomes. This approach is particularly useful for exploring data and discovering hidden insights.

Here’s a detailed overview of unsupervised learning:

Data Collection:

Gather a dataset that includes only the input features, with no corresponding output labels. For example, in a clustering task, you might have customer data with features like age, income, and purchase history, but no predefined categories or labels.

Data Preprocessing:

Clean and prepare the data by handling missing values, scaling features, and possibly reducing dimensionality. Preprocessing is crucial to ensure that the data is suitable for analysis and that any patterns discovered are meaningful.

Model Selection:

Choose an appropriate unsupervised learning algorithm based on the nature of the problem and the type of insights desired. Common algorithms include:

- Clustering Algorithms: These group similar data points together. Examples include K-means clustering, hierarchical clustering, and DBSCAN. Clustering can help identify natural groupings within the data, such as segmenting customers into distinct groups based on purchasing behavior.

- Dimensionality Reduction Algorithms: These reduce the number of features while retaining essential information. Techniques such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are used to simplify complex datasets, making them easier to visualize and analyze.

- Association Rule Learning: This identifies relationships between variables. For instance, market basket analysis can reveal which products are frequently bought together, helping retailers optimize product placements and promotions.
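Association rules are usually scored by support and confidence. Support is how often an itemset occurs. Confidence is how often the consequent appears given the antecedent.

The sketch below computes both on a tiny hypothetical basket dataset. `pair_rules` is an illustrative brute-force helper that only considers single-item rules; algorithms such as Apriori prune the search for larger itemsets.

```python
from itertools import permutations

def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= set(t)) / len(transactions)

def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent) = support(A ∪ C) / support(A)."""
    both = support(transactions, set(antecedent) | set(consequent))
    return both / support(transactions, antecedent)

def pair_rules(transactions, min_support=0.5, min_confidence=0.6):
    """Brute-force all rules {a} -> {c} that pass both thresholds."""
    items = sorted(set().union(*transactions))
    rules = []
    for a, c in permutations(items, 2):
        if support(transactions, {a, c}) >= min_support:
            conf = confidence(transactions, {a}, {c})
            if conf >= min_confidence:
                rules.append((a, c, conf))
    return rules
```

Consider the baskets `[{bread, butter}, {bread, butter, milk}, {bread, jam}, {milk}]`. The rule bread → butter has support 0.5 and confidence 2/3, while butter → bread has confidence 1.0.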

Model Training:

Apply the chosen algorithm to the data to discover patterns, clusters, or associations. Unlike supervised learning, there is no explicit training phase with labeled outcomes. Instead, the model identifies structures or relationships based solely on the input data.
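As one concrete instance of this step, here is a minimal sketch of K-means (Lloyd's algorithm). It assumes numeric points given as tuples and a fixed number of clusters `k`. The random initialization from data points and the iteration cap are illustrative choices.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's K-means on points given as tuples; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k distinct data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its member points.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return centroids, labels
```

The result depends on the initial centroids. Running it several times with different seeds and keeping the best clustering is a common safeguard.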

Evaluation:

Assess the results of the unsupervised learning process. Since there are no ground truth labels, evaluation involves interpreting the discovered patterns and verifying their relevance. For clustering, evaluation might include visual inspection of the clusters or using metrics like silhouette score to assess cluster quality. For dimensionality reduction, it might involve analyzing how well the reduced features capture the essential information.
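The silhouette score compares two distances for each point: the mean distance to its own cluster ($a$) and the mean distance to the nearest other cluster ($b$). It then computes $(b - a) / \max(a, b)$. Values near 1 indicate well-separated clusters, and negative values suggest points sit in the wrong cluster. Below is a minimal sketch, assuming Euclidean distance and at least two clusters.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (labels given as a list)."""
    scores = []
    for i, p in enumerate(points):
        same = [math.dist(p, q) for j, q in enumerate(points)
                if labels[j] == labels[i] and j != i]
        if not same:  # singleton cluster: its silhouette is defined as 0
            scores.append(0.0)
            continue
        a = sum(same) / len(same)  # mean distance within the point's own cluster
        b = min(  # mean distance to the closest other cluster
            sum(math.dist(p, q) for q, lab in zip(points, labels) if lab == other)
            / labels.count(other)
            for other in set(labels) if other != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)
```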

Insights and Interpretation:

Analyze the results to draw meaningful conclusions. For example, clustering might reveal distinct customer segments that can inform targeted marketing strategies, while association rules might uncover interesting patterns in purchasing behavior.

Deployment:

Depending on the insights gained, you might use the findings to make data-driven decisions or guide further analysis. For instance, the identified customer segments could be used to tailor marketing efforts, or dimensionality reduction might be employed to improve the performance of other machine learning models by reducing complexity.

Unsupervised learning is valuable because it can handle scenarios where labeled data is not available or is difficult to obtain. It helps uncover hidden structures and relationships in data, making it ideal for exploratory data analysis, anomaly detection, and feature extraction. However, it also has limitations, such as the lack of clear evaluation criteria and potential difficulty in interpreting the results.

Semi-Supervised Learning:

Semi-supervised learning is a machine learning approach that combines aspects of both supervised and unsupervised learning. It leverages a small amount of labeled data alongside a larger pool of unlabeled data to improve model performance. This approach is particularly useful when obtaining labeled data is expensive or time-consuming, but unlabeled data is more readily available.

Here’s a detailed breakdown of semi-supervised learning:

Data Collection:

In semi-supervised learning, the dataset consists of two parts:

- Labeled Data: A small set of data where each input is paired with a known output label. For example, in a classification task, this might include a few thousand labeled images with corresponding categories.

- Unlabeled Data: A larger set of data where the outputs are not known. This could include millions of images without labels.

Data Preprocessing:

As with other machine learning methods, preprocessing is crucial. This involves cleaning the data, handling missing values, and normalizing or standardizing features. Proper preprocessing ensures that both labeled and unlabeled data are prepared for the learning process.

Model Selection:

Choose an algorithm that can effectively utilize both labeled and unlabeled data. Common approaches include:

- Self-Training: The model is initially trained on the labeled data. It then makes predictions on the unlabeled data, and the most confident predictions are added to the labeled set to retrain the model. This iterative process continues until the model’s performance stabilizes.

- Co-Training: This approach trains two or more models on different views of the data (for example, different subsets of the features). Each model labels the unlabeled examples it is most confident about, and those labels are added to the training data of the other models. The idea is that the models can help each other improve by providing additional labels.

- Generative Models: Techniques like Gaussian Mixture Models or Variational Autoencoders can be used to model the underlying distribution of the data. They can incorporate unlabeled data to learn the structure of the input space, which helps improve classification performance.
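Self-training can be sketched with a deliberately simple base model. The sketch assumes a hypothetical nearest-centroid classifier. Its confidence score is the distance gap between the two closest class centroids, and `min_margin` is an arbitrary illustrative threshold. Points the model remains unsure about are left unlabeled. At least two classes are assumed.

```python
import math

def fit_centroids(points, labels):
    """Hypothetical base model: the mean point of each class."""
    centroids = {}
    for cls in set(labels):
        members = [p for p, y in zip(points, labels) if y == cls]
        centroids[cls] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids

def predict_with_margin(centroids, p):
    """Return (label, margin); margin = gap between the two nearest centroids."""
    ranked = sorted(centroids, key=lambda cls: math.dist(p, centroids[cls]))
    d_best = math.dist(p, centroids[ranked[0]])
    d_second = math.dist(p, centroids[ranked[1]])
    return ranked[0], d_second - d_best

def self_train(labeled, labels, unlabeled, min_margin=2.0, max_rounds=10):
    """Repeatedly pseudo-label confident points and retrain on the enlarged set."""
    labeled, labels, pool = list(labeled), list(labels), list(unlabeled)
    for _ in range(max_rounds):
        model = fit_centroids(labeled, labels)
        confident = []
        for p in pool:
            cls, margin = predict_with_margin(model, p)
            if margin >= min_margin:
                confident.append((p, cls))
        if not confident:
            break  # nothing left that the model is sure about
        for p, cls in confident:
            labeled.append(p)
            labels.append(cls)  # pseudo-label added to the training set
            pool.remove(p)
    return fit_centroids(labeled, labels), pool
```

In the example checked below, the four unlabeled points near a class are pseudo-labeled in the first round. A point equidistant from both classes stays in the pool.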

Model Training:

The training process involves using both labeled and unlabeled data to learn the underlying patterns. The model first learns from the labeled data and then uses the insights gained to interpret the unlabeled data. Two techniques are often employed to make the unlabeled data more useful without overwhelming the model with its own mistakes: pseudo-labeling (training on the model's own confident predictions) and consistency regularization (encouraging the same prediction for slightly perturbed versions of an input).

Evaluation:

Evaluate the model’s performance on a separate validation or test set made up of labeled examples that were held out from training, since predictions on unlabeled data cannot be scored. Common evaluation metrics include accuracy, precision, recall, and F1 score, depending on the nature of the task.

Tuning:

Adjust the model’s parameters and the ratio of labeled to unlabeled data to optimize performance. Hyperparameter tuning and experimenting with different proportions of labeled data can help enhance the model’s accuracy and generalization capabilities.

Deployment:

Once the model is trained and evaluated, it can be deployed to make predictions on new, unseen data. The insights gained from the semi-supervised approach can also be used to refine the model further or to inform decision-making processes.

Semi-supervised learning is advantageous because it makes use of unlabeled data, which is often abundant and inexpensive to collect. It can lead to better generalization and improved performance compared to purely supervised learning, especially when labeled data is scarce. However, challenges include ensuring the quality of the pseudo-labels and the risk that incorrect pseudo-labels reinforce the model’s own errors.

Reinforcement Learning:

Reinforcement learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent aims to maximize cumulative rewards through trial and error, using feedback from its actions to improve its strategy over time. Unlike supervised learning, where models are trained on labeled data, reinforcement learning involves learning from the consequences of actions taken within a dynamic environment.

Here’s a detailed explanation of reinforcement learning:

Agent and Environment:

In reinforcement learning, the system is divided into two main components:

- Agent: The entity that makes decisions and takes actions. The agent’s goal is to maximize its cumulative reward.

- Environment: The external system with which the agent interacts. The environment responds to the agent’s actions and provides feedback in the form of rewards or penalties.

State and Action:

The agent operates within a defined state space and action space:

- State: Represents the current situation or configuration of the environment. For example, in a game, the state might include the position of all players and obstacles.

- Action: A move or decision the agent can make; the set of all available actions is the action space. Actions alter the state of the environment and influence future states and rewards.

Reward Signal:

The environment provides feedback to the agent through rewards. A reward is a numerical value that evaluates the desirability of the agent’s actions. The goal of the agent is to maximize the total accumulated reward over time. Positive rewards encourage actions that lead to desirable outcomes, while negative rewards (penalties) discourage actions that lead to undesirable results.

Policy:

The policy is a strategy or rule that the agent uses to determine its actions based on the current state. It can be deterministic (a specific action for each state) or stochastic (a probability distribution over actions). The policy guides the agent’s decision-making process and is central to the learning process.

Value Function:

The value function estimates the expected cumulative reward for a given state or state-action pair. It helps the agent evaluate the long-term benefits of being in a particular state or taking a specific action. There are two main types of value functions:

- State Value Function ($V(s)$): Estimates the expected cumulative reward of being in state $s$ and following a given policy thereafter.

- Action Value Function ($Q(s, a)$): Estimates the expected cumulative reward of taking action $a$ in state $s$ and then following the policy.
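Written out, the two value functions take the following form under the common discounted formulation. That formulation is an assumption here: the text does not introduce the discount factor $\gamma \in [0, 1)$, the policy $\pi$, or the reward $r_{t+1}$ received after acting at time $t$. The last line relates the two functions.

```latex
V^{\pi}(s) = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k+1} \,\middle|\, s_t = s\right]

Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k+1} \,\middle|\, s_t = s,\ a_t = a\right]

V^{\pi}(s) = \sum_{a} \pi(a \mid s)\, Q^{\pi}(s, a)
```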

Learning Process:

The agent learns through interactions with the environment, using algorithms to improve its policy. Common reinforcement learning algorithms include:

- Q-Learning: An off-policy algorithm that learns the action value function $Q(s, a)$ to determine the best action for each state.

- SARSA (State-Action-Reward-State-Action): An on-policy algorithm that updates the action value function using the action that the current policy actually selects in the next state.

- Deep Q-Networks (DQN): Combines Q-learning with deep neural networks to handle high-dimensional state spaces, such as images in video games.

- Policy Gradient Methods: Directly optimize the policy by estimating the gradient of expected rewards with respect to the policy parameters.
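A tabular Q-learning sketch on a hypothetical five-state corridor makes the off-policy idea concrete. The agent starts at state 0 and receives a reward of 1 for reaching state 4. Each update bootstraps from the best action in the next state. The environment, the discount factor `gamma`, and the learning rate `alpha` are illustrative assumptions. Because Q-learning is off-policy, the agent here explores with purely random actions, yet the greedy policy read from the learned table still heads toward the goal.

```python
import random

N_STATES = 5       # hypothetical corridor: states 0..4, reaching state 4 ends the episode
ACTIONS = (0, 1)   # 0 = move left, 1 = move right

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    if action == 0:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Behavior policy: uniformly random actions (pure exploration).
            action = rng.choice(ACTIONS)
            next_state, reward, done = step(state, action)
            # Off-policy target: bootstrap from the greedy value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

def greedy_policy(q):
    """Pick the highest-valued action in every state."""
    return [max(ACTIONS, key=lambda a: row[a]) for row in q]
```

SARSA would instead use the value of the action the agent actually takes next in the target. That ties what it learns to the behavior policy.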

Exploration vs. Exploitation:

A key challenge in reinforcement learning is balancing exploration (trying new actions to discover their effects) with exploitation (choosing actions that are known to yield high rewards). Effective RL strategies incorporate mechanisms to balance these aspects, such as ε-greedy policies or more sophisticated exploration techniques.
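An ε-greedy policy can be written in a few lines. The sketch below assumes action values stored in a list indexed by action. The decaying schedule `decayed_epsilon` and its constants are a hypothetical but common pattern: explore heavily early, then exploit more as the value estimates improve.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore uniformly; otherwise exploit the best action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    best = max(q_values)
    # Break ties randomly among equally good actions.
    return rng.choice([a for a, v in enumerate(q_values) if v == best])

def decayed_epsilon(episode, start=1.0, end=0.05, decay=0.99):
    """Hypothetical schedule: exponential decay from `start` down to a floor of `end`."""
    return max(end, start * decay ** episode)
```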

Applications:

Reinforcement learning is widely used in various fields, including robotics (for autonomous control and manipulation), game playing (e.g., AlphaGo), finance (for portfolio management), and autonomous vehicles (for navigation and decision-making).

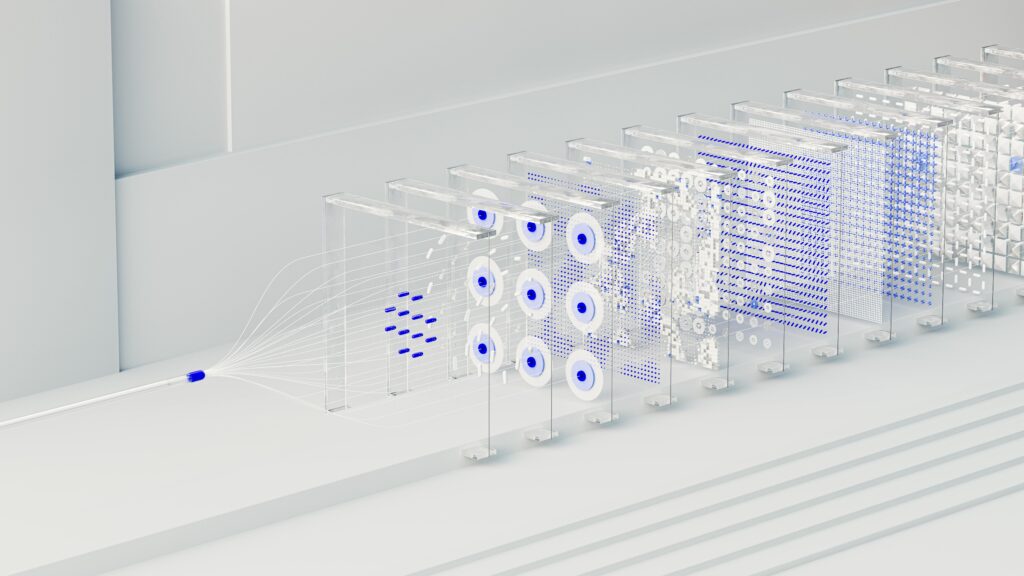

Deep Learning:

Deep learning is a subset of machine learning that involves training artificial neural networks with many layers, known as deep neural networks, to model complex patterns and representations in data. It has revolutionized fields such as computer vision, natural language processing, and speech recognition due to its ability to automatically learn features from large amounts of data.

Here’s a comprehensive overview of deep learning:

Neural Networks:

At the core of deep learning is the artificial neural network, loosely inspired by the structure and function of the human brain. A neural network consists of layers of interconnected nodes (neurons), where each node processes input data and passes its output to the next layer. The basic types of layers include:

- Input Layer: Accepts the raw data, such as images or text.

- Hidden Layers: Intermediate layers where data is processed through weighted connections. Deep networks have multiple hidden layers, hence the term “deep” learning.

- Output Layer: Produces the final prediction or classification result.

Activation Functions:

Each neuron applies an activation function to the weighted sum of its inputs to introduce non-linearity into the model. Common activation functions include:

- ReLU (Rectified Linear Unit): $\text{ReLU}(x) = \max(0, x)$, which helps mitigate the vanishing gradient problem and allows for faster training.

- Sigmoid: $\text{Sigmoid}(x) = \frac{1}{1 + e^{-x}}$, which maps inputs to the range (0, 1) and is often used in the output layer for binary classification.

- Tanh: $\text{Tanh}(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$, which maps inputs to the range (-1, 1); its zero-centered outputs often make training easier than with the sigmoid.
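The three activation functions can be sketched with Python's `math` module. The sigmoid is split by the sign of its input, so `math.exp` is never called on a large positive number, which would overflow. `math.tanh` already evaluates the tanh formula without overflow.

```python
import math

def relu(x):
    """max(0, x): passes positive inputs through and zeroes negative ones."""
    return max(0.0, x)

def sigmoid(x):
    """1 / (1 + e^-x), computed in a form that cannot overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z is in (0, 1)
    return z / (1.0 + z)

def tanh(x):
    """(e^x - e^-x) / (e^x + e^-x), delegated to the stable library routine."""
    return math.tanh(x)
```

The two saturating functions are closely related: $\tanh(x) = 2\,\text{Sigmoid}(2x) - 1$, so tanh is a rescaled, zero-centered sigmoid.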

Training Deep Networks:

Training deep learning models involves adjusting the weights of connections between neurons to minimize the error between the predicted output and the actual target values. This is typically done using a process called backpropagation in conjunction with optimization algorithms:

- Backpropagation: An algorithm used to compute the gradient of the loss function with respect to each weight by applying the chain rule. It helps in updating the weights to reduce the error.

- Optimization Algorithms: Methods like Gradient Descent and its variants (e.g., Stochastic Gradient Descent, Adam) are used to adjust the weights based on the computed gradients.
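For a single sigmoid neuron with binary cross-entropy loss, backpropagation reduces to a short chain-rule calculation. The gradient with respect to the pre-activation $z = wx + b$ is $p - y$. It is then multiplied by $\partial z / \partial w = x$ and $\partial z / \partial b = 1$.

The sketch below implements that with hypothetical function names, one training example, and plain stochastic gradient descent. The analytic gradient can be checked against a finite-difference estimate.

```python
import math

def forward(w, b, x, y):
    """One sigmoid neuron; returns (probability, binary cross-entropy loss)."""
    z = w * x + b
    p = 1.0 / (1.0 + math.exp(-z))
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return p, loss

def backward(w, b, x, y):
    """Chain rule: dL/dz = p - y, then dz/dw = x and dz/db = 1."""
    p, _ = forward(w, b, x, y)
    dl_dz = p - y
    return dl_dz * x, dl_dz

def sgd_step(w, b, x, y, lr=0.5):
    """Move both parameters a small step against their gradients."""
    grad_w, grad_b = backward(w, b, x, y)
    return w - lr * grad_w, b - lr * grad_b
```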

Loss Function:

The loss function measures the difference between the model’s predictions and the actual target values. Common loss functions include:

- Cross-Entropy Loss: Used for classification tasks; it measures how well the predicted class probabilities (values between 0 and 1) match the true labels, penalizing confident wrong predictions heavily.

- Mean Squared Error (MSE): Used for regression tasks, it measures the average squared difference between predicted and actual values.
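Both losses are short to write down. This sketch uses the binary form of cross-entropy, matching the probabilities-between-0-and-1 description above. It clips probabilities by a small `eps` (an assumed value) so the logarithm stays finite.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Average of -[y log p + (1 - y) log(1 - p)] over the examples."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # keep log() away from 0
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)
```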

Regularization Techniques:

To prevent overfitting (where the model learns noise instead of the underlying pattern), several regularization techniques are employed:

- Dropout: Randomly drops neurons during training to prevent the network from becoming too reliant on specific neurons.

- L1/L2 Regularization: Adds a penalty to the loss function based on the magnitude of the weights, encouraging simpler models.
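Here is a minimal sketch of both techniques, assuming activations and weights stored as plain lists. The dropout shown is the common "inverted" variant. It rescales the kept units by $1/(1 - \text{rate})$ during training, so nothing needs to change at inference time. `lam` is the regularization strength, an illustrative parameter name.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate`, rescale survivors."""
    if not training or rate == 0.0:
        return list(activations)  # no-op at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, lam):
    """lam * sum(w^2), added to the data loss; discourages large weights."""
    return lam * sum(w * w for w in weights)

def l1_penalty(weights, lam):
    """lam * sum(|w|), added to the data loss; tends to push weights to exactly zero."""
    return lam * sum(abs(w) for w in weights)
```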

Architectures:

Deep learning encompasses various network architectures tailored for different tasks:

- Convolutional Neural Networks (CNNs): Specialized for image processing, they use convolutional layers to detect spatial hierarchies in images.

- Recurrent Neural Networks (RNNs): Designed for sequential data, such as time series or text, they maintain hidden states that capture temporal dependencies.

- Transformers: An architecture introduced in 2017 and now widely used in natural language processing, which relies on self-attention mechanisms to process all positions of a sequence in parallel.
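Self-attention can be sketched as single-head scaled dot-product attention, $\text{softmax}(QK^\top / \sqrt{d_k})\,V$. Here $Q$, $K$, and $V$ are linear projections of the input sequence. The projection matrices are assumptions supplied by the caller; in a real model they are learned during training. The plain-list matrix code favors readability over speed. Every position attends to every other position in one step, which is what lets the sequence be processed in parallel.

```python
import math

def softmax(row):
    """Normalize scores into weights that sum to 1."""
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over a sequence x (n rows)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Score every query against every key at once; no recurrence over positions.
    scores = [[sum(qi * ki for qi, ki in zip(q_row, k_row)) / math.sqrt(d_k)
               for k_row in k] for q_row in q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights
```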

Applications:

Deep learning has transformative applications across various domains:

- Computer Vision: Object detection, image classification, and facial recognition.

- Natural Language Processing: Machine translation, sentiment analysis, and text generation.

- Speech Recognition: Converting spoken language into text, for example in voice assistants.

Transfer Learning:

Transfer learning is a machine learning technique where a model trained on one task is adapted and fine-tuned to perform a different but related task. This approach leverages the knowledge acquired from one domain or problem to improve learning efficiency and performance in another domain. Transfer learning is particularly useful when there is limited labeled data available for the target task but ample data for a related source task.

Here’s a detailed overview of transfer learning:

Concept of Transfer Learning:

The core idea behind transfer learning is to utilize a pre-trained model on a source task to jump-start the learning process on a target task. By doing so, the model can benefit from the patterns and features learned from the source data, which can accelerate training and improve performance on the target task.

Pre-trained Models:

Transfer learning often involves using models that have already been trained on large datasets for a general task. For instance:

- Image Classification: Models like VGG, ResNet, and Inception, which have been trained on large image datasets like ImageNet, can be adapted for specific image classification tasks.

- Natural Language Processing (NLP): Models such as BERT, GPT, and T5, pre-trained on vast text corpora, can be fine-tuned for specific NLP tasks like sentiment analysis, question answering, or text summarization.

Fine-Tuning:

Fine-tuning is the process of adapting a pre-trained model to a new task. This involves:

- Feature Extraction: Using the features learned by the pre-trained model as input to a new model. For example, you might use the convolutional layers of a pre-trained CNN as a fixed feature extractor and train a new classifier on top.

- Fine-Tuning Layers: Adjusting the weights of some or all layers of the pre-trained model while training on the target task. This approach is useful when the target task is closely related to the source task.
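The feature-extraction variant can be sketched without a framework. `PRETRAINED_W` is a hypothetical stand-in for the frozen layers of a pre-trained network. Its weights are never updated, and only a new logistic-regression head is trained on the features it produces. In a framework such as PyTorch, freezing is typically done by setting `requires_grad = False` on the pre-trained parameters.

```python
import math

# Hypothetical stand-in for a pre-trained network's frozen layers.
# These weights are treated as fixed and are never updated below.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5], [-1.0, 0.2]]

def frozen_features(x):
    """Frozen feature extractor: ReLU(W x) with the pre-trained weights."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def train_head(inputs, labels, lr=0.5, epochs=500):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [frozen_features(x) for x in inputs]  # computed once, since they never change
    w, b = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            # Gradient of binary cross-entropy with respect to the head's parameters only.
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
            b -= lr * (p - y)
    return w, b

def predict(head, x):
    """Classify x using the frozen features and the trained head."""
    w, b = head
    z = sum(wi * fi for wi, fi in zip(w, frozen_features(x))) + b
    return 1 if z > 0 else 0
```

Fine-tuning layers would differ only in that `PRETRAINED_W` would also be updated, usually with a smaller learning rate than the new head.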

Approaches to Transfer Learning:

- Inductive Transfer: When the target task differs from the source task and at least some labeled data is available for the target task. For instance, using a model trained on general object detection to improve performance on a specific type of object detection.

- Transductive Transfer: When the source and target tasks are the same but the domains differ, and labeled data is available only in the source domain. For example, applying a sentiment classifier trained on labeled product reviews to unlabeled movie reviews.

- Domain Adaptation: A common form of transductive transfer in which the source and target tasks are the same, but the data distributions differ. Techniques like domain adversarial training can be employed to align the source and target distributions.

Advantages of Transfer Learning:

- Reduced Training Time: Transfer learning can significantly shorten training time since the model starts with pre-learned features and patterns.

- Improved Performance: Leveraging pre-trained models often results in better performance, especially when the target dataset is small or lacks diversity.

- Less Data Requirement: Transfer learning is beneficial when labeled data is scarce, as it allows the model to utilize knowledge gained from a larger, related dataset.

Challenges and Considerations:

- Domain Mismatch: If the source and target domains are very different, transfer learning may not be effective. The pre-trained features might not generalize well to the target domain.

- Overfitting: Fine-tuning on a small target dataset might lead to overfitting. Regularization techniques and careful fine-tuning are essential to mitigate this risk.

- Model Compatibility: Ensuring that the architecture of the pre-trained model is compatible with the target task is crucial.

Applications:

- Medical Imaging: Adapting models trained on general images to specific medical imaging tasks, such as tumor detection.

- Speech Recognition: Using models pre-trained on general speech data to enhance performance on specific accents or languages.

- Recommendation Systems: Applying models trained on general user behavior to specific domains, such as movie or product recommendations.
